No.

When you need serious power for an internet service, you generally colocate a server. Colocation means you own the server hardware and rent space for it in a datacenter. At minimum, this gives you physical access to your server during the datacenter's business hours, and you are free to upgrade as you can afford it. From there you can get seriously dedicated, with dedicated VMs.

Example configuration:

On the server you install a hypervisor, such as OpenVZ 7 on VZLinux, Xen on CentOS or Arch Linux, or, if you are dead serious, Xen on Gentoo or LFS (Linux From Scratch).

The hypervisor on the colocated server hosts several VMs, each with a specific purpose:

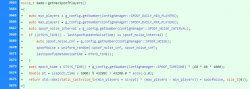

- Gameworld VM: This VM will run your TFS daemon and have direct access to its own physical network card. It will also have a virtual network card with a private IP in the 172.16.0.0/12 range.

- Database VM: This VM will get raw physical access to its own SSD, which hosts your Percona Server for MySQL database. It will have only a virtual network card, with a private IP in the 172.16.0.0/12 range.

- Webserver VM: This VM will host your nginx, PHP, Node.js, and other public-facing services. It will have direct physical access to a network card, as well as a virtual network card with a private IP in the 172.16.0.0/12 range.

- Monitoring VM: This VM will host your Nagios or Zabbix installation, which monitors the relevant metrics to determine the current health of the other VMs. It will use a virtual network card in the 172.16.0.0/12 range to communicate with them.
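The network layout in the list above can be captured in a short Python sketch. It assumes hypothetical internal addresses 172.16.0.2 to 172.16.0.5 (any free addresses in the private 172.16.0.0/12 block would do); the `VM` class and `check_layout` function are illustrative names, not part of any tool. It checks that each internal address is private and unique, and returns the VMs that have no public network card.

```python
import ipaddress
from dataclasses import dataclass

# RFC 1918 private block that the "172 range" addresses should fall into.
PRIVATE_172 = ipaddress.ip_network("172.16.0.0/12")


@dataclass(frozen=True)
class VM:
    name: str
    internal_ip: str  # address on the host-only virtual network (hypothetical)
    public_nic: bool  # True if the VM gets its own physical network card


# Hypothetical layout matching the four VMs described above.
VMS = [
    VM("gameworld", "172.16.0.2", public_nic=True),
    VM("database", "172.16.0.3", public_nic=False),
    VM("webserver", "172.16.0.4", public_nic=True),
    VM("monitoring", "172.16.0.5", public_nic=False),
]


def check_layout(vms: list[VM]) -> list[str]:
    """Validate internal addresses; return names of VMs with no public route."""
    seen = set()
    for vm in vms:
        ip = ipaddress.ip_address(vm.internal_ip)
        if ip not in PRIVATE_172:
            raise ValueError(f"{vm.name}: {ip} is outside {PRIVATE_172}")
        if ip in seen:
            raise ValueError(f"{vm.name}: duplicate address {ip}")
        seen.add(ip)
    # VMs without a physical NIC are reachable only over the virtual network.
    return [vm.name for vm in vms if not vm.public_nic]
```

For the layout above, `check_layout(VMS)` returns `["database", "monitoring"]`. Note that 172.32.0.0 and above is public address space, so "172 range" really means 172.16.0.0 through 172.31.255.255.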

When you need access to one of the VMs that has no public route, you use SSH tunneling. Gameworld and Webserver talk to Database over the local 172 virtual network. You can also allocate resources to each VM: if you have 16 physical cores available, for example, you can give 6 cores to Gameworld and 6 to Database, and let the Webserver and Monitoring VMs share the remaining 4.
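Both ideas in the paragraph above can be sketched in Python. The core numbering (0 to 5 for Gameworld, 6 to 11 for Database, 12 to 15 shared) is a hypothetical assumption; actual pinning is configured in the hypervisor itself, and this only checks that a plan is consistent. `ssh_tunnel_argv` builds a standard OpenSSH local port forward (`-L local_port:host:host_port`, with `-N` to run no remote command) through a publicly reachable jump host; the host name, IP, and ports in the usage are hypothetical. All function names here are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CpuAssignment:
    vm: str
    cores: frozenset[int]  # host core indices the VM may run on (hypothetical numbering)
    shared: bool = False   # True if these cores are shared with other VMs


def plan_cores(total: int = 16) -> list[CpuAssignment]:
    """Split the host as in the example: 6 + 6 dedicated cores, the rest shared."""
    if total < 13:
        raise ValueError("need 12 dedicated cores plus at least one shared core")
    gameworld = frozenset(range(0, 6))
    database = frozenset(range(6, 12))
    pool = frozenset(range(12, total))
    return [
        CpuAssignment("gameworld", gameworld),
        CpuAssignment("database", database),
        CpuAssignment("webserver", pool, shared=True),
        CpuAssignment("monitoring", pool, shared=True),
    ]


def validate(plan: list[CpuAssignment], total: int) -> None:
    """Raise ValueError if dedicated cores overlap or a core index is out of range."""
    dedicated: set[int] = set()
    for a in plan:
        if not a.shared:
            if dedicated & a.cores:
                raise ValueError(f"{a.vm}: dedicated cores overlap another VM")
            dedicated |= a.cores
    pool = set().union(*(a.cores for a in plan if a.shared))
    if dedicated & pool:
        raise ValueError("a dedicated core is also in the shared pool")
    if not (dedicated | pool) <= set(range(total)):
        raise ValueError("plan uses a core index the host does not have")


def ssh_tunnel_argv(jump_host: str, target_ip: str, target_port: int,
                    local_port: int) -> list[str]:
    """Build an OpenSSH command forwarding local_port to target_ip:target_port.

    The target address is resolved on jump_host, so it can be a private
    172.16.0.0/12 address that only the jump host can reach.
    """
    return ["ssh", "-N", "-L", f"{local_port}:{target_ip}:{target_port}", jump_host]
```

For example, `ssh_tunnel_argv("admin@webserver.example", "172.16.0.3", 3306, 13306)` builds a command that, once run (e.g. with `subprocess.run`), lets a MySQL client on your workstation reach the Database VM at `127.0.0.1:13306`, since OpenSSH binds forwarded ports to the loopback interface by default.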