Ok guys, let’s actually do something. I know OP didn’t want us to write any code, but we can do it anyway.

So here’s my proposal for a new map format. (Credit to @jo3bingham for the idea of dividing the map into areas. My format is based on it, but goes a little further.)

First of all, why? I know many of you already know why we need a new format, but I’ll quickly summarize it.

OTBM:

+fast

+supported everywhere

-binary file = not git friendly

Cipsoft format:

+many small text files = git friendly

-slow

-divided into sectors, which doesn’t really make sense (you can see in a git client which file changed, but you don’t know which part of the map it covers)

So this leads us to a format that can eliminate all these disadvantages while keeping all the advantages (if combined with some other tools). Now, what could it look like?

A map is a directory, just like in the Cipsoft format. But inside it there can be other directories, and inside those directories more directories, and so on. Every directory represents one area on the map (I’m not sure if “area” is the best name here, maybe it could be “region” or something else). An area is just a part of the map that can be marked in the map editor (just like houses now). And these areas can be nested infinitely (well, almost, because the minimum size of an area is 1 sqm).

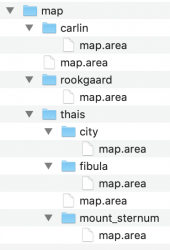

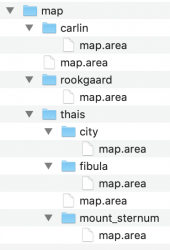

For example, let’s say we have a real/global Cipsoft map. In the map editor, we can mark areas like Rookgaard, Thais, Carlin, … And then we can mark subareas; for example, in the Thais area we can mark city, Mount Sternum, Fibula, … If we save this map, its directory will look like this:

And now, if you change something on Fibula, save the map and open a git client, everything will be clear as soon as you look at the file path: map/thais/fibula/map.area.

There’s one more thing to explain here: what are these other map.area files for? They basically contain all sqms that don’t fit anywhere more specific. So if an sqm doesn’t belong to any area, it’ll be placed in the map/map.area file. But if an sqm belongs to the Thais area and doesn’t belong to any Thais subarea, it’ll be placed in map/thais/map.area. What does a map.area file look like? Probably similar to Cipsoft .sec files, one line per sqm. The exact syntax is yet to be determined.
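To make the "most specific area wins" rule above concrete, here’s a rough Python sketch. Everything in it is my assumption for illustration: the `Area` class, modeling an area as a simple rectangle (areas marked in an editor could have any shape), and the coordinates in the usage note. Only the resulting paths come from the proposal.

```python
from dataclasses import dataclass, field
from pathlib import PurePosixPath


@dataclass
class Area:
    """Hypothetical area: a named, inclusive rectangle with nested subareas."""
    name: str
    bounds: tuple[int, int, int, int]  # (x1, y1, x2, y2), assumed shape
    children: list["Area"] = field(default_factory=list)

    def contains(self, x: int, y: int) -> bool:
        x1, y1, x2, y2 = self.bounds
        return x1 <= x <= x2 and y1 <= y <= y2


def area_file_for(root: Area, x: int, y: int) -> PurePosixPath:
    """Return the map.area file of the deepest area containing (x, y).

    The root stands for the map directory itself, so an sqm that matches
    no area at all ends up in <root>/map.area.
    """
    path = PurePosixPath(root.name)
    node = root
    while True:
        # Descend into the first subarea that contains the sqm, if any.
        child = next((c for c in node.children if c.contains(x, y)), None)
        if child is None:
            break
        path /= child.name
        node = child
    return path / "map.area"
```

With a made-up layout where `fibula` is a subarea of `thais`, an sqm on Fibula resolves to `map/thais/fibula/map.area`, an sqm elsewhere in Thais to `map/thais/map.area`, and an sqm outside every area to `map/map.area`.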

This format already eliminates two of the three disadvantages of the current formats, but it’s still slow. That’s why we need another binary format and a “compiler”. Just as we compile the code, we’ll compile the map directory into a binary file. There are (at least) two ways of doing it, and our game server can quite easily support both.

- We just start the server, and it compiles the map before loading it. This may look like it brings no speed improvement, but I’ll explain that below.

- We use a CI/CD pipeline, which compiles both server and map for us and deploys only binaries. Then the server loads this binary at startup.

How can the server handle both cases easily? It checks whether the map directory exists and whether the map binary exists. That gives 4 possibilities:

- Neither exists - server prints an error and exits

- Only binary - server loads binary

- Only directory - server compiles it and loads newly created binary

- Both exist - server calculates a checksum of the directory (I googled it, it should be possible to derive it from the checksums of all files) and compares it with the checksum stored inside the binary (the map compiler must calculate it and save it in the binary during compilation for this to work). If they match, the server loads the existing binary; if not, it compiles the directory and loads the new binary
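The four cases above fit in one small function. This is only a sketch in Python (the real server would do this in its own language), and the names `Action` and `choose_action` are made up for illustration:

```python
from enum import Enum
from typing import Optional


class Action(Enum):
    ERROR = "error"                        # nothing to load
    LOAD_BINARY = "load_binary"            # use the existing binary
    COMPILE_AND_LOAD = "compile_and_load"  # (re)compile, then load


def choose_action(
    dir_exists: bool,
    bin_exists: bool,
    dir_checksum: Optional[str] = None,
    bin_checksum: Optional[str] = None,
) -> Action:
    """Decide what the server does at startup.

    The checksums only matter when both the directory and the binary exist.
    """
    if not dir_exists and not bin_exists:
        return Action.ERROR
    if not dir_exists:
        return Action.LOAD_BINARY
    if not bin_exists:
        return Action.COMPILE_AND_LOAD
    # Both exist: reuse the binary only if it was built from this directory.
    if dir_checksum is not None and dir_checksum == bin_checksum:
        return Action.LOAD_BINARY
    return Action.COMPILE_AND_LOAD
```

Note that a missing checksum is treated as "changed" here, so the server recompiles rather than risk loading a stale binary. That’s a design choice on my side, not something the proposal fixes.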

So in the first case, without CI/CD, it’s still quite fast, because the server only recompiles the map if it changed, and nobody changes the server map every day. But it still has to calculate checksums every time it starts. Maybe there’s a better way to do it? Checking modification times? Or maybe we can use git hooks to check checksums and recompile the map only when some files changed?
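For reference, a directory checksum built from per-file checksums could look roughly like this in Python. The choice of SHA-256 and the function name `directory_checksum` are my assumptions; any stable hash would do:

```python
import hashlib
from pathlib import Path


def directory_checksum(root: Path) -> str:
    """Hash every file under root into a single hex digest.

    Files are visited in sorted order of their relative path, so the result
    does not depend on the order the filesystem lists them in. The relative
    path is hashed too, so moving or renaming a file changes the checksum.
    """
    files = [p for p in root.rglob("*") if p.is_file()]
    files.sort(key=lambda p: p.relative_to(root).as_posix())

    digest = hashlib.sha256()
    for path in files:
        rel = path.relative_to(root).as_posix()
        file_hash = hashlib.sha256(path.read_bytes()).digest()
        digest.update(rel.encode("utf-8"))
        digest.update(b"\0")  # separator between path and content hash
        digest.update(file_hash)
    return digest.hexdigest()
```

This reads every file on each start, which is exactly the cost mentioned above. A modification-time check would be cheaper, but timestamps are not preserved by a git checkout, so it would be less reliable.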

Now, what about spawns and houses? I personally think they should be included inside the map directory, because they’re just a part of the map. I think they can both use the same area system, so every map.area file can be accompanied by corresponding spawns and houses files.

But we have to assign each house to a city, so maybe the solution is to allow adding some parameters to areas? This way you can create a Thais city in the map editor and assign it to the “thais” area. Now all houses inside this area will be assigned to the Thais city automatically. There are two ways of doing it: either adding a new file with some parameters in each area directory, or adding an area name to each city in a cities config file. Where will the cities data be stored? Probably the best option is to add a global map config file in the root directory. It can use json/yml/toml and contain data like: author, version, size, client version, cities, and anything else we need.
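Here’s a rough sketch of the second option (an area name attached to each city in the global config), in Python with a JSON config. The config keys, the values, and the `city_for_area` helper are all hypothetical; the proposal doesn’t fix any of them.

```python
import json

# Hypothetical global map config; keys and values are made up for illustration.
CONFIG_JSON = """
{
  "author": "example",
  "version": "1.0",
  "cities": [
    {"name": "Thais", "area": "thais"},
    {"name": "Carlin", "area": "carlin"}
  ]
}
"""


def city_for_area(config: dict, area_path: str):
    """Return the city whose area is the closest ancestor of area_path.

    Paths are relative to the map root, e.g. "thais/city".
    Returns None when no city covers the area.
    """
    parts = area_path.strip("/").split("/")
    best_name, best_depth = None, 0
    for city in config["cities"]:
        city_parts = city["area"].strip("/").split("/")
        is_prefix = parts[: len(city_parts)] == city_parts
        # Prefer the deepest matching area if several cities match.
        if is_prefix and len(city_parts) > best_depth:
            best_name, best_depth = city["name"], len(city_parts)
    return best_name


config = json.loads(CONFIG_JSON)
```

With this config, a house stored under `thais/city` resolves to Thais, while a house in an area no city claims gets `None` and would need to be handled by the editor or the compiler.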