You can set list of proxies inJust a passing thought - maybe we can receive the "login proxy" list over http when the client is opened - that'd keep me happy haha

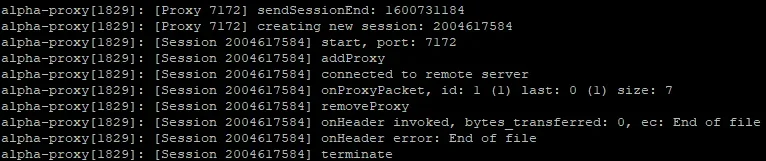

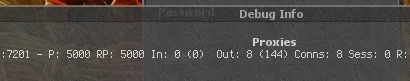

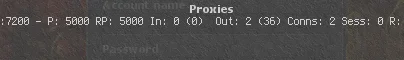

init.lua, login to account (with OTCv8 proxy system, it will pick 1 working proxy to send login packet), get new list of proxies in login packet, call g_proxy.clear() to remove all proxies (OTCv8 does it by default, Mehah does not) and add new proxies to client. It will use new proxies to connect to OTS.How do you use multiple login proxies for failover without proxy system? Using some external load balancer with HTTP protocol to login?I didn't want to hard code anything, since I also use multiple login proxies for failover, the same way CipSoft does it, just in case one of them goes down.
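The init.lua flow described above (bootstrap proxies first, then swap in the list received at login) could look roughly like the sketch below. Only `g_proxy.clear()` is named in this thread; the `g_proxy.addProxy(host, port, priority)` signature is my assumption about the client API, `replaceProxies` is a hypothetical helper, and all addresses and ports are placeholders. A small stand-in table is used when `g_proxy` is not available, so the snippet also runs outside the client.

```lua
-- Use the client's g_proxy when present; otherwise fall back to a stand-in
-- that just records proxies in a table (for running outside the client).
proxyApi = g_proxy or (function()
  local list = {}
  return {
    list = list,
    -- assumed signature: addProxy(host, port, priority)
    addProxy = function(host, port, priority)
      list[#list + 1] = { host = host, port = port, priority = priority }
    end,
    clear = function()
      for i = #list, 1, -1 do list[i] = nil end
    end,
  }
end)()

-- Hypothetical helper: drop the bootstrap proxies and install the ones
-- received in the login response.
function replaceProxies(newProxies)
  proxyApi.clear()
  for _, p in ipairs(newProxies) do
    proxyApi.addProxy(p.host, tonumber(p.port), tonumber(p.priority))
  end
end

-- Step 1 (init.lua): bootstrap login proxies, placeholder addresses.
proxyApi.addProxy("198.51.100.10", 7162, 0)
proxyApi.addProxy("198.51.100.11", 7162, 0)

-- Step 2 (after login): the server sent a fresh list, so replace the old one.
replaceProxies({
  { host = "203.0.113.20", port = "7162", priority = "0" },
  { host = "203.0.113.21", port = "7162", priority = "1" },
})
```

With this shape, nothing beyond the bootstrap list is hard-coded in the client; the game proxies can change on the server side without shipping a new client.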

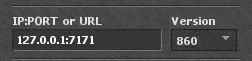

From the first post's description, it looks like you must either put one of the haproxy IPs as the server IP in the client and set the login port to 7100, in which case it connects through that single proxy (no failover), or log in to the account using the IP of the dedicated server running the OTS, which makes this system absolutely useless. If an attacker can get the real OTS IP, he can DDoS it and the OTCv8 proxy becomes useless.