Night Wolf


Hi everyone,

Latest updates:

Just to give a proper explanation of how this works, because many people are messaging me on Discord out of fear of a new technology that they don't understand:

- GANs are basically algorithms that take several days to train, and they do a simple thing: they receive a random number and output what they learned from the training files. The closer the results are to the real data, the better the network is at generating new content based on what it saw.
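To make the "random number in, image out" idea concrete, here is a toy sketch in plain Python. It is not the network used in this project: `make_generator`, `LATENT_DIM` and the single random layer are all made up for illustration, and since nothing is trained the output is just noise. A real GAN generator has the same shape of interface, but its weights are learned from the training sprites.

```python
import math
import random

SIZE = 32        # sprite width and height, matching the 32x32 sprites in this thread
LATENT_DIM = 8   # hypothetical length of the random input vector


def make_generator(seed=0):
    """Build a toy 'generator': one random linear layer followed by tanh.

    A real generator has many layers with weights learned during training;
    these weights are random, so the result is only structured noise.
    """
    rng = random.Random(seed)
    weights = [[rng.gauss(0, 1) for _ in range(LATENT_DIM)]
               for _ in range(SIZE * SIZE)]

    def generate(z):
        # Each pixel is a weighted sum of the latent values, squashed to [-1, 1].
        flat = [math.tanh(sum(w * v for w, v in zip(row, z))) for row in weights]
        # Rescale to 0..255 grayscale and cut the flat list into SIZE rows.
        pixels = [int(round((p + 1) * 127.5)) for p in flat]
        return [pixels[r * SIZE:(r + 1) * SIZE] for r in range(SIZE)]

    return generate


generator = make_generator()
z = [random.gauss(0, 1) for _ in range(LATENT_DIM)]  # the "random number"
sprite = generator(z)  # a 32x32 grid of grayscale values
```

The same `z` always gives the same image, and a different `z` gives a different one, which is why asking a trained network for "random" sprites just means feeding it fresh random vectors.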

So if you're a spriter and you feel that I will somehow mess with your work, you're wrong. This will mainly be used to generate new concepts and 'base' sprites that are copyright free. If someone wants to hire your services, a GAN won't get in the way, as it only produces things that are somewhat similar to what it has seen before. It won't draw Naruto/Pokémon sprites since it has only seen swords from TibiaWiki.

- After training with swords, I'll set aside the pickle file (basically where it stores the 'learning') and start training with other sprites to understand how the transition works (from swords to clubs, for example). I also want to know how converting a sword network to clubs compares with training on clubs from scratch.
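The two experiments can be sketched with Python's standard `pickle` module. This is only an illustration of the idea: the dictionary layout, the file name `swords.pkl` and the helper names are hypothetical, and a real GAN checkpoint holds full network objects rather than three numbers.

```python
import os
import pickle
import tempfile


def save_checkpoint(path, state):
    """Write the learned state to a pickle file."""
    with open(path, "wb") as f:
        pickle.dump(state, f)


def load_checkpoint(path):
    """Read a learned state back from a pickle file."""
    with open(path, "rb") as f:
        return pickle.load(f)


# Hypothetical state at the end of the sword run.
sword_state = {"weights": [0.12, -0.7, 0.33], "trained_on": ["swords"], "steps": 10000}

checkpoint_dir = tempfile.mkdtemp()
sword_path = os.path.join(checkpoint_dir, "swords.pkl")
save_checkpoint(sword_path, sword_state)

# Experiment A: start the club run from what the sword run learned.
club_from_swords = load_checkpoint(sword_path)
club_from_swords["trained_on"].append("clubs")

# Experiment B: start the club run with no prior learning.
club_from_scratch = {"weights": [0.0, 0.0, 0.0], "trained_on": ["clubs"], "steps": 0}
```

Because the sword file is kept separately and never overwritten, both club runs can be compared against the same starting point. Only load pickle files from sources you trust, since unpickling can execute arbitrary code.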

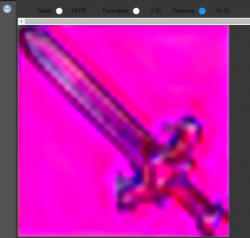

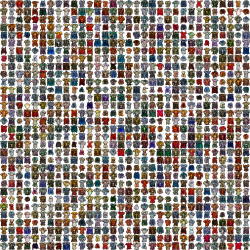

I'll keep you all updated on the progress. Below is the last generated batch. It has already mastered a few Tibia sprites, so I'm pretty close to asking it to draw random ones and checking the results.

Unfortunately it also returns a lot of noise in the image (from magenta variations to tones of pink in the blade). I'm still searching for a way to quickly clean those images and avoid having to do mass manual edits on the resulting sprites. It is possible that more days of training will correct the noise, but that is just theoretical.
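One possible automatic cleanup for the magenta variations is to snap every pixel that is close enough to pure magenta back to exactly (255, 0, 255). The sketch below assumes sprites are stored as rows of (r, g, b) tuples; the function name and the tolerance of 60 are guesses that would need tuning against real output, not something tested on this project.

```python
MAGENTA = (255, 0, 255)


def snap_to_magenta(pixels, tolerance=60):
    """Return a copy of the image with near-magenta pixels set to pure magenta.

    pixels: list of rows, each row a list of (r, g, b) tuples.
    tolerance: maximum Euclidean distance in RGB space that still counts
    as background (hypothetical default).
    """
    def is_near_magenta(p):
        dist_sq = sum((a - b) ** 2 for a, b in zip(p, MAGENTA))
        return dist_sq <= tolerance ** 2

    return [[MAGENTA if is_near_magenta(p) else p for p in row] for row in pixels]


noisy = [
    [(250, 10, 245), (255, 0, 255)],   # slightly-off magenta, pure magenta
    [(120, 120, 130), (230, 40, 220)], # grey blade pixel, noisier magenta
]
cleaned = snap_to_magenta(noisy)
```

This only helps with the background. Pink tones inside the blade that sit far from magenta are left untouched, and ones that sit close to it would be turned into background, so the threshold is a trade-off.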

Latest updates:

- I've found that it is better to train the network to be an expert in one thing instead of training it to draw both swords and other weapons. We get fewer glitchy sprites and the results become more reasonable, even though they are more repetitive because of the lack of data.

- I've added some custom free-for-use sprites and others that people donated to this project. The only thing is that I added them on top of the current learned file, so the network is still adapting to them. The results seem pretty good so far.

- I've started training from scratch at 32x32 using a pink (magenta) background. To my mind it is easier to separate the alpha channel (transparency) later by keying on the magenta pixels rather than identifying the object against a black background and cutting with a 1-pixel margin (AA).
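The magenta-to-transparency step from the last point could look roughly like this. It is a minimal sketch that assumes sprites are rows of (r, g, b) tuples and that the background is exactly (255, 0, 255); `key_out_magenta` is a made-up name, not part of any library.

```python
def key_out_magenta(pixels, key=(255, 0, 255)):
    """Convert RGB pixels to RGBA, making every pixel equal to `key` transparent.

    pixels: list of rows, each row a list of (r, g, b) tuples.
    Returns rows of (r, g, b, a) tuples with a = 0 for background, 255 otherwise.
    """
    return [
        [(0, 0, 0, 0) if p == key else (p[0], p[1], p[2], 255) for p in row]
        for row in pixels
    ]


sprite = [
    [(255, 0, 255), (200, 200, 210)],  # background, blade
    [(90, 60, 30), (255, 0, 255)],     # hilt, background
]
rgba = key_out_magenta(sprite)
```

An exact match only works when the background is clean, so any noisy magenta has to be normalized first or it will survive as opaque pink pixels.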
