Night Wolf


Location: Spain
GitHub: andersonfaaria

Dear OTLand,

As those of you following my initial thread Swords Artificially Generated (GANs) will know, the future is here!

Today I'm sharing a recently released technology: the articles behind it were published less than 2 months ago!

I have put together this Colab notebook because I know not everyone has a good GPU at home. With it, you can run the generator in the cloud for free.

Pixel Weapons Generator - Google Colaboratory

I hope this will be a game changer for people who have always wanted custom sprites but didn't have the initial capital to invest. I also hope this tool can help improve initiatives such as the OpenTibia Sprite Pack.

For now I'm only releasing the Pixel Weapons Generator. It took me about a month and a half to fine-tune the learning rates and other hyperparameters, and to study A LOT of the theory behind it.

This doesn't generate finished weapons, but you can literally use the sliders to edit layouts and find cool models to start from. Once you find one you like, you can refine it by choosing some of the layers and increasing the distance along them, which changes many traits such as color, element (in some cases, limited to fire and ice) and geometric details (size, width, weight, hilt, rotation and so on). Due to the lack of training data, not every change will produce a good result, but with some manual cleanup of the background and a bit of noise reduction, the output can actually be used.

A few generated examples:

If you want to know more about GANs, check out this video from the Computerphile channel.

Also check out this video about the tool you're about to run, GANSpace. It will give you an idea of how to control the parameters you want.
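For the curious, the core idea behind GANSpace is to run PCA over many intermediate latent vectors sampled through the generator's mapping network; each principal direction is one "component" you can move along. The sketch below shows only that PCA step with NumPy. The function name `find_components` is hypothetical, and since the real mapping network isn't available here, random stand-in data takes the place of the sampled latents:

```python
import numpy as np

def find_components(w_samples: np.ndarray, n_components: int):
    """PCA over sampled latents, in the spirit of GANSpace.

    w_samples: (N, D) array; in GANSpace these would be w = mapping(z)
    for many random z. Returns (mean, directions, stdevs), where
    directions has shape (n_components, D) with unit-length rows.
    """
    mean = w_samples.mean(axis=0)
    centered = w_samples - mean
    # SVD of the centered data: rows of vt are the principal directions,
    # ordered by decreasing singular value (i.e. explained variance).
    _, s, vt = np.linalg.svd(centered, full_matrices=False)
    stdevs = s[:n_components] / np.sqrt(len(w_samples) - 1)
    return mean, vt[:n_components], stdevs

# Stand-in data: 5000 fake 16-D "latents" with most variance along axis 3.
rng = np.random.default_rng(0)
fake_w = rng.normal(size=(5000, 16))
fake_w[:, 3] *= 8.0
mean, directions, stdevs = find_components(fake_w, n_components=4)
# The first direction should be close to a one-hot vector at index 3.
print(np.round(np.abs(directions[0]), 2))
```

With real latents, each row of `directions` would be a candidate edit direction, and its standard deviation gives a sense of how far it is "normal" to move along it.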

If you have never used Google Colab, these are the first steps (I'll continue the instructions in a follow-up post because I've reached the limit of 10 images):

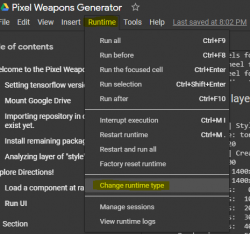

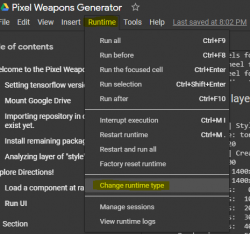

1) Make sure your runtime type is set to GPU (Runtime > Change runtime type). This won't run in CPU mode because it depends on the CUDA and TensorFlow libraries.

2) Go to each cell and press the play button in its corner. Wait for each cell to finish executing before moving on to the next one:

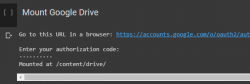

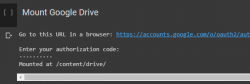
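Regarding step 1, a quick way to confirm that the runtime really has a GPU is to ask the NVIDIA driver tools, which are present on Colab GPU runtimes. This is a minimal sketch, not a cell from the notebook, and `gpu_available` is a hypothetical helper name:

```python
import shutil
import subprocess

def gpu_available() -> bool:
    """Return True if an NVIDIA GPU is visible to this runtime."""
    if shutil.which("nvidia-smi") is None:
        return False  # no driver tools: almost certainly a CPU-only runtime
    # "nvidia-smi -L" prints one line per GPU, e.g. "GPU 0: Tesla T4 (...)"
    result = subprocess.run(["nvidia-smi", "-L"], capture_output=True, text=True)
    return result.returncode == 0 and "GPU" in result.stdout

if not gpu_available():
    print("No GPU found: switch the runtime type to GPU and reconnect.")
```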

3) To run, the notebook needs to download my repository and install it in your Google Drive. To do so, run this cell, click the link, sign in with your Google account, copy the authorization code, paste it into the input box that appears and press Enter.
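Step 3 relies on mounting Google Drive. Assuming the notebook uses Colab's standard `google.colab.drive` helper (the real cell may also clone the repository and do other setup, which is not shown here), the mount part boils down to something like this sketch, where `mount_drive` is a hypothetical wrapper that simply does nothing outside Colab:

```python
def mount_drive(mount_point: str = "/content/drive") -> bool:
    """Mount Google Drive when running inside Colab.

    Returns False outside Colab, where google.colab is not installed.
    """
    try:
        from google.colab import drive  # only importable inside Colab
    except ImportError:
        return False
    # Triggers the Google sign-in / authorization prompt described in step 3
    drive.mount(mount_point)
    return True

if mount_drive():
    print("Drive mounted at /content/drive")
```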

4) After running everything, you'll reach the "hardest" part: finding good configurations for your model.

The first setting is the component. In this code the component is set to a random number from 0 to 20, but you can set it to a constant value by double-clicking the title of the cell.
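Pinning the component simply replaces the random draw with a constant. A minimal sketch, assuming the 0 to 20 range is inclusive on both ends; `pick_component` is a hypothetical name, not the notebook's variable:

```python
import random
from typing import Optional

def pick_component(fixed: Optional[int] = None, low: int = 0, high: int = 20) -> int:
    """Return the fixed component if one is given, else a random one in [low, high]."""
    if fixed is not None:
        return fixed
    return random.randint(low, high)  # randint includes both ends

component = pick_component()      # random, like the notebook's default
pinned = pick_component(fixed=7)  # a constant value instead
```

Keeping the component fixed is what lets you compare seeds and sliders without the underlying direction changing between runs.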

The second comes when you run the UI: it starts at a random seed with truncation 0.7. Make sure to read all the text and instructions in the Colab; they will help you throughout.

5) Play with the seed and truncation first; they are the first controls you'll want to experiment with. Good truncation values are usually between 0.5 and 1.0. Increasing it past 1.0 shouldn't change anything, but sometimes it does, depending on which component you're on.
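To build some intuition for these two controls: in the usual StyleGAN formulation that GANSpace builds on, the seed only selects the random starting vector z, and truncation psi pulls the resulting latent w toward the average latent, w' = w_avg + psi * (w - w_avg). With psi = 1 the latent is left unchanged, smaller values give safer but less varied results, and larger values push w further from the average. A sketch with NumPy, assuming a 512-dimensional latent (StyleGAN's default; the notebook's model may differ); the mapping network that turns z into w is not shown, and the function names are hypothetical:

```python
import numpy as np

LATENT_DIM = 512  # StyleGAN's default z size; an assumption for this model

def latent_from_seed(seed: int, dim: int = LATENT_DIM) -> np.ndarray:
    """Same seed -> same z, which is why a seed reproduces a model."""
    return np.random.RandomState(seed).randn(dim)

def truncate(w: np.ndarray, w_avg: np.ndarray, psi: float) -> np.ndarray:
    """Truncation trick: interpolate between the average latent and w."""
    return w_avg + psi * (w - w_avg)

w = np.array([2.0, -4.0])
w_avg = np.zeros(2)
halfway = truncate(w, w_avg, 0.5)  # [1.0, -2.0]: halfway to the average
```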

Once you find a good model, you can change some of its details by choosing the layers you want to edit and increasing the distance (which is multiplied by the scale). I usually start with scale 10.0 and slide the distance from -10 to +10 for each layer range (0-1, 1-2, 2-3, 3-4, ...) to understand what each one changes in the model. Once I have identified (and named) what each range does, I start changing what matters most to me.
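The editing step can be pictured as adding a component direction to the per-layer latents, but only inside the selected layer range. A minimal sketch, assuming the latents are stored per layer as an array of shape (num_layers, latent_dim), that `distance` is multiplied by `scale` as described above, and that the end of a range is exclusive (so 0-1 edits layer 0 alone); `edit_layers` and `sweep` are hypothetical names, not the notebook's:

```python
import numpy as np

def edit_layers(w_layers, direction, start, end, distance, scale=1.0):
    """Shift layers [start, end) of w_layers along a unit direction."""
    edited = w_layers.copy()  # keep the original model intact
    edited[start:end] += direction * (distance * scale)
    return edited

def sweep(w_layers, direction, scale=10.0, distances=(-10, -5, 0, 5, 10)):
    """Yield ((start, end, distance), edited) for ranges 0-1, 1-2, 2-3, ..."""
    num_layers = w_layers.shape[0]
    for start in range(num_layers):
        for d in distances:
            yield (start, start + 1, d), edit_layers(
                w_layers, direction, start, start + 1, d, scale
            )
```

Each edited latent would then go through the synthesis network to render a sprite (not shown); comparing the renders per range is how you learn what each range controls.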

I hope we make good use of it. I'm still studying how it can be improved to achieve even better results.

I only ask two things:


- Make good use of it.

- If you find a way to remove the noise from the pink background in bulk, let me know.
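As one possible starting point for the background problem, here is a sketch that keys out every pixel close to a chosen background color and saves the result with transparency. The key color (pure magenta, 255, 0, 255) and the tolerance are assumptions: sample the actual pink from your outputs and tune the tolerance, since too high a value will also eat into the weapon. It uses NumPy and Pillow, and the function names are hypothetical:

```python
from pathlib import Path

import numpy as np

def key_out_background(rgb, key=(255, 0, 255), tolerance=60):
    """Make pixels close to `key` transparent; returns an RGBA uint8 array."""
    rgb = np.asarray(rgb, dtype=np.uint8)
    # Euclidean distance of every pixel to the key color (int32 avoids overflow)
    diff = rgb.astype(np.int32) - np.array(key, dtype=np.int32)
    dist = np.sqrt((diff ** 2).sum(axis=-1))
    alpha = np.where(dist <= tolerance, 0, 255).astype(np.uint8)
    return np.dstack([rgb, alpha])

def clean_folder(src_dir, dst_dir, **kwargs):
    """Apply key_out_background to every PNG in src_dir, saving into dst_dir."""
    from PIL import Image  # Pillow, imported here so the core needs only NumPy

    out_dir = Path(dst_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    for path in sorted(Path(src_dir).glob("*.png")):
        rgb = np.asarray(Image.open(path).convert("RGB"))
        Image.fromarray(key_out_background(rgb, **kwargs)).save(out_dir / path.name)
```

A hard cutoff like this leaves a fringe on anti-aliased edges; a second pass with a small tolerance, or manual cleanup, may still be needed.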
