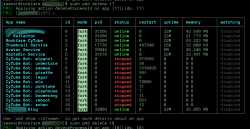

I'm extremely familiar with PM2. Here is a shot from when I was tearing down an install on one of my beloved personal servers.


That 22M is twenty-two months of uptime. The server is being retired because the metal she's on runs OVZ6 (OpenVZ 6) on a 2.6 kernel, CTs (containers) only.

PM2 has some failings I do not like. Take the use case where you want it to run as root, and only as root, so that all users (or perhaps a delegated group) can call it and get info, but only root can make any sort of change. They do not support this scenario despite a mind-boggling level of demand for it; I'm sure I could go find at least 15 issues on their tracker referencing it. Instead, when a user invokes it, it spawns a new instance even though you know damn well one is already running. Nuisance.

Another is that, at least the last time I checked (and this has been the case for several years now), if your app spawns subshells or child processes, the reported memory usage is not correct.
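To illustrate why child processes throw the numbers off: anything that reads only the parent's RSS misses whatever its children hold. Here's a minimal Linux-only sketch (mine, not how PM2 works internally; the function names are made up) that walks the process tree through `/proc` and sums `VmRSS`. Summing RSS double-counts pages shared between parent and children, so treat the total as an upper bound; per-process PSS from `/proc/<pid>/smaps_rollup` would be more accurate.

```python
import os


def _children_map():
    """Map ppid -> [child pids] by scanning /proc/<pid>/stat (Linux only)."""
    children = {}
    for entry in os.listdir("/proc"):
        if not entry.isdigit():
            continue
        try:
            with open(f"/proc/{entry}/stat") as f:
                stat = f.read()
        except OSError:
            continue  # process exited while we were scanning
        # Field 2 (comm) is in parentheses and may contain spaces, so split
        # after the last ')': what remains is "state ppid ...".
        fields = stat[stat.rindex(")") + 2:].split()
        children.setdefault(int(fields[1]), []).append(int(entry))
    return children


def rss_kib(pid):
    """Resident set size of a single process in KiB, from /proc/<pid>/status."""
    try:
        with open(f"/proc/{pid}/status") as f:
            for line in f:
                if line.startswith("VmRSS:"):
                    return int(line.split()[1])  # "VmRSS:   12345 kB"
    except OSError:
        pass
    return 0  # exited processes and kernel threads report nothing


def tree_pids(root):
    """All pids in the subtree rooted at `root` (a point-in-time snapshot)."""
    children = _children_map()
    pids, stack = [], [root]
    while stack:
        pid = stack.pop()
        pids.append(pid)
        stack.extend(children.get(pid, []))
    return pids


def tree_rss_kib(root):
    """Sum of VmRSS over the whole process tree (upper bound; see note above)."""
    return sum(rss_kib(p) for p in tree_pids(root))


if __name__ == "__main__":
    import subprocess
    import sys

    child = subprocess.Popen([sys.executable, "-c", "import time; time.sleep(5)"])
    me = os.getpid()
    print("parent only:", rss_kib(me), "KiB")
    print("parent + children:", tree_rss_kib(me), "KiB")
    child.terminate()
    child.wait()
```

The gap between those two lines is roughly the memory a parent-only report would leave out.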

That being said, I still find it extremely useful, but I think it's an inappropriate choice for the task you are proposing it for. TFS should be handled by systemd, just like her sister daemons nginx and mysql are. We could provide automated packaging tooling for common installation targets like Ubuntu LTS. If a wrapper is needed to facilitate coredumps, it would be slipstreamed into that packaging.
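For the coredump case, here's a rough sketch of what such a wrapper could look like, assuming a Linux host; the server path is whatever you pass on the command line (nothing here is existing TFS tooling). It raises the soft core-size limit to the hard limit, which needs no root, and then execs the server in place so the supervisor keeps tracking the same PID. Under systemd, `LimitCORE=infinity` in the unit may make a wrapper unnecessary, and where the dump actually lands still depends on the kernel's `core_pattern` (e.g. systemd-coredump or apport on Ubuntu).

```python
import os
import resource
import sys


def raise_core_limit():
    """Raise the soft RLIMIT_CORE up to the hard limit and return (soft, hard).

    An unprivileged process may raise its soft limit as far as the hard
    limit, so this works without root.
    """
    _soft, hard = resource.getrlimit(resource.RLIMIT_CORE)
    resource.setrlimit(resource.RLIMIT_CORE, (hard, hard))
    return resource.getrlimit(resource.RLIMIT_CORE)


def main(argv):
    if len(argv) < 2:
        sys.exit(f"usage: {argv[0]} /path/to/server [args...]")
    raise_core_limit()
    # Resource limits survive exec, and os.execv replaces this process,
    # so the server inherits the new limit under the same PID.
    os.execv(argv[1], argv[1:])


if __name__ == "__main__":
    main(sys.argv)
```

In a unit file that would be something like `ExecStart=/usr/local/bin/core-wrapper /opt/tfs/tfs` (both paths hypothetical).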

NodeJS userland is complicated. These are users who often have trouble compiling binaries even for apps that ship wonderfully verbose CMake tooling telling them exactly which packages are missing, and they still need their hand held. Asking them to manage a NodeJS stack on top of that? That sounds like a match made in hell.