How's it going you amazing thing you @tarjei

I have some issue with coloring outfits, and I had to take care of some stuff irl :/

You think that's a valid excuse?

No

Also, I would like to mention, in case it wasn't mentioned before, that the options include an FPS limit for the UI. After changing it from Max to 10 FPS, my overall FPS went up from 390 to 850.

Ad. 3: It will work if you compose this atlas from a few textures (there is a maximum size for a texture), but even then, if you bind an entire texture this big, the GPU will choke.

Yeah, that will be based on glGetIntegerv(GL_MAX_TEXTURE_SIZE, &size), and technically there will be a few atlases. But literally, instead of SpriteManager, things will ask our "AtlasManager" to get the sprites, and it will resolve them. Plus, the max size depends on your hardware & drivers. For example:

==========================

GPU Intel(R) HD Graphics 4600

OpenGL 4.3.0 - Build 20.19.15.4444

MaxSize 16384

==========================

For example, Tibia 10.41 has 154624 32x32 sprites in total. Around 10-15 "virtual texture atlases" will be enough and will perform nicely. That is better than using 154624+ Texture2D objects anyway.
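The atlas count above can be checked with a little arithmetic. The sketch below is only an illustration: the function names are made up, and the 4096x4096 page size used in the usage note is an assumption (the post does not say which page size gives 10-15 atlases).

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical helper: how many square sprites fit in one square atlas page.
// atlasSide would come from glGetIntegerv(GL_MAX_TEXTURE_SIZE, ...) or a smaller cap.
inline std::uint64_t spritesPerAtlas(std::uint32_t atlasSide, std::uint32_t spriteSide)
{
    assert(spriteSide > 0 && atlasSide >= spriteSide);
    const std::uint64_t perRow = atlasSide / spriteSide;
    return perRow * perRow;
}

// Hypothetical helper: atlas pages needed for spriteCount sprites, rounded up.
inline std::uint64_t atlasesNeeded(std::uint64_t spriteCount,
                                   std::uint32_t atlasSide,
                                   std::uint32_t spriteSide)
{
    const std::uint64_t capacity = spritesPerAtlas(atlasSide, spriteSide);
    return (spriteCount + capacity - 1) / capacity;
}
```

With 154624 sprites of 32x32, `atlasesNeeded(154624, 4096, 32)` gives 10 pages, and a single 16384x16384 page (the MaxSize reported above) could hold all of them, although binding one texture that large may not be a good idea on every GPU.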

By the way, is there a big difference between the optimized client you have implemented and this one that uses OpenGL 2?

Yeah, there is. It's currently optimized, but the code is very dirty (quick-fix code style). Also, I am testing several variants of the refactored code, to avoid the same issues with our client in the future, and doing graphics profiling. Once it is finished, I will make a pull request to the repository. Currently, yeah, it works, but this should be done before the pull request:

1) Experiments with various ways of processing our textures (atlases, arrays, etc.) for optimization & performance.

2) "Clean" code refactoring without my dummy classes (the structure looks ugly, and that is just for tests) + code commenting.

3) Rework the LightView system... I cry when I look at it. That is not a light system... that is masochism.

(There should be PointLight, DirectionalLight and AmbientLight using the OpenGL API (which already has them), just with a class for operating them.)

4) I already have an updated version of our GLEW library with fixes (GLEW 2.0+), but not only that! IMHO there is no need to stick to plain OpenGL 2.0 nowadays. OpenGL 3.0 was released in 2008, and today's "old PCs" are from around 2009-2010 (6 years old) and already have GPUs with OpenGL 3.0 support. That should not be a big issue... Just for example, if you want to play Crysis on an Alliance Semiconductor GPU with 2 MB of RAM... well... time for a little upgrade, no? (Really, guys, a laptop/PC with a DX10, OpenGL 4.0 card is really CHEAP; seriously, an "old" Asus K53SV notebook (no longer manufactured), with DX11 and OGL 4.5, costs around $175.00 on eBay (checked specifically when writing this post).) But as I said above, an "old" PC is not a Pentium 90 nowadays; it already supports OGL3 and meets all the requirements for playing Tibia on OTClient. We don't actually need to keep OpenGL 2.0 backwards compatibility.

Hello all, it's nice to see so much interest in optimizing otclient's graphics. As I've coded the majority of the code, I can point out what could be optimized and improved, some of the flaws, and what you guys are missing.

1. Drawing Less Tiles

The current game map rendering architecture of otclient draws 18*14 = 252 tiles into a framebuffer, but only 15*11 = 165 tiles are visible on screen. So there is a lot of room for improvement here: 87 extra tiles are drawn that are not visible, meaning that we could draw ~35% less. There is a reason for it, though. One row at the top, two rows at the bottom, one column at the left and two columns at the right are drawn because when you walk you need to see where you are going. But you need those extra tiles only while walking; they can be discarded when you are not moving. Moreover, depending on the direction of the walk, you need to draw only one row or one column. Nevertheless, otclient draws all these extra rows and columns simply because nobody has optimized that yet. Note that we can't simply discard the bottom/right rows/columns without taking the 64x64 sprites (trees, 2x2 creatures) into account. Therefore, an algorithm that discards those rows/columns of tiles and draws them only when needed would greatly improve performance.
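A rough sketch of such an algorithm follows. It is not otclient code: the `Walk` enum, `DrawArea` and `tilesToDraw` are hypothetical names, diagonal walking is ignored, and it assumes that one extra row at the bottom and one extra column at the right are always kept because 64x64 sprites extend up and left from their tile.

```cpp
#include <cassert>

// Hypothetical walk state; diagonal steps are left out to keep the sketch short.
enum class Walk { None, North, East, South, West };

struct DrawArea { int columns; int rows; };

// Visible area is 15x11 tiles. Assumption: one extra bottom row and one extra
// right column are always drawn so 64x64 sprites just off-screen still show.
inline DrawArea tilesToDraw(Walk walk)
{
    DrawArea area{15 + 1, 11 + 1};
    switch (walk) {
    case Walk::North:
    case Walk::South:
        area.rows += 1;     // one more row in the walking direction
        break;
    case Walk::East:
    case Walk::West:
        area.columns += 1;  // one more column in the walking direction
        break;
    case Walk::None:
        break;
    }
    assert(area.columns <= 18 && area.rows <= 14); // never more than today's 18x14
    return area;
}

inline int tileCount(DrawArea area) { return area.columns * area.rows; }
```

Under these assumptions a standing player needs 192 tiles instead of 252, and a player walking north or south needs 208.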

2. Drawing Even Less Tiles

There is more room for performance improvement. There is a function in the map called isCompletelyCovered(); it basically checks whether a tile is completely covered by another tile (meaning that we don't need to draw the covered tile). This function works on average, but it's not smart enough: there are cases that it simply misses, and it doesn't even take the tiles' dimensions into account.

3. Drawing creatures is not the problem

Many here saw that there is a big performance drop when drawing many creatures on the screen. It really does happen, but the creatures themselves are not the main cause. The first problem is text drawing for creatures, which needs an algorithm with better caching. Every time a creature moves, its name moves too, and the engine has to recalculate all the text glyph positions. This could be improved by caching the text coords in a vertex array and just translating the vertex array instead of recalculating everything again. In fact, any moving text in otclient will consume more CPU, and the longer the text, the more it consumes. You generally don't notice this because the UI text is static. The second cause is the battle list, which has some algorithms that work poorly when dealing with a huge number of creatures. If you hide the creature names and disable the battle module, you will see that the FPS doesn't drop that much with many creatures.
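The caching idea can be sketched as follows. This is an illustration only, not otclient's text code: `CachedText`, `GlyphQuad` and `translated` are hypothetical names, and a fixed 8x12 glyph size stands in for real font metrics.

```cpp
#include <cassert>
#include <string>
#include <vector>

struct GlyphQuad { float x, y, w, h; };

// Hypothetical cache: glyph quads are laid out once, relative to (0, 0),
// and rebuilt only when the text itself changes.
class CachedText {
public:
    void setText(const std::string& text)
    {
        if (text == m_text)
            return;          // same text: keep the cached layout
        m_text = text;
        m_dirty = true;
    }

    const std::vector<GlyphQuad>& quads()
    {
        if (m_dirty) {
            rebuild();
            m_dirty = false;
        }
        return m_quads;
    }

    int rebuildCount() const { return m_rebuilds; }

private:
    void rebuild()
    {
        m_quads.clear();
        float penX = 0.0f;
        for (std::size_t i = 0; i < m_text.size(); ++i) {
            m_quads.push_back({penX, 0.0f, kGlyphW, kGlyphH});
            penX += kGlyphW;  // assumption: fixed-width glyphs
        }
        assert(m_quads.size() == m_text.size());
        ++m_rebuilds;
    }

    static constexpr float kGlyphW = 8.0f;
    static constexpr float kGlyphH = 12.0f;
    std::string m_text;
    std::vector<GlyphQuad> m_quads;
    bool m_dirty = true;
    int m_rebuilds = 0;
};

// Moving the text only adds an offset; the layout is not recalculated.
inline GlyphQuad translated(const GlyphQuad& q, float dx, float dy)
{
    return {q.x + dx, q.y + dy, q.w, q.h};
}
```

In a real renderer the offset would more likely be applied as a translation when the cached vertex array is drawn, rather than per quad on the CPU.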

4. Lights don't have the best implementation

As some noted, the lights are drawn as big bubbles, meaning more lights means more bubbles to draw, and the bigger the light, the bigger the bubble. When you have many lights, the FPS will decrease a lot. This is not how I originally intended to implement lights. This algorithm was first introduced by @tarjei, who sent me his idea and patch by email; together with him I adapted the algorithm to otclient because, how cool, we had lights working. However, years have passed and the algorithm is still the same; it works. In a better world the lighting system would use vertex lighting. This would split the final drawn game map framebuffer into squares, and each corner of the squares would have a color value associated with the intensity/color of the light at that corner. Then we would draw the framebuffer as a batch of squares with the lighting colors associated with each square. This method would not decrease FPS even with a huge number of lights. Another idea would be per-pixel lighting using shaders, but that would need a recent graphics card.
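As a sketch of the vertex lighting idea, the function below computes one intensity per grid corner. The names (`Light`, `cornerLighting`) are hypothetical, only a scalar intensity is computed instead of a color, and the linear falloff `1 - distance / radius` is an assumption borrowed from the shader posted later in this thread.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Light { float x, y, radius, intensity; };

// Returns (cols + 1) * (rows + 1) corner intensities in row-major order,
// each clamped to [0, 1]. The grid is then drawn as one batch of squares.
inline std::vector<float> cornerLighting(int cols, int rows, float cellSize,
                                         const std::vector<Light>& lights,
                                         float ambient)
{
    assert(cols > 0 && rows > 0 && cellSize > 0.0f);
    const std::size_t stride = static_cast<std::size_t>(cols) + 1;
    std::vector<float> corners(stride * (static_cast<std::size_t>(rows) + 1), ambient);

    for (int cy = 0; cy <= rows; ++cy) {
        for (int cx = 0; cx <= cols; ++cx) {
            float value = ambient;
            for (const Light& light : lights) {
                const float dx = cx * cellSize - light.x;
                const float dy = cy * cellSize - light.y;
                const float dist = std::sqrt(dx * dx + dy * dy);
                // Linear falloff, clamped so lights never subtract brightness.
                const float falloff = std::max(0.0f, std::min(1.0f, 1.0f - dist / light.radius));
                value += light.intensity * falloff;
            }
            corners[static_cast<std::size_t>(cy) * stride + cx] = std::min(value, 1.0f);
        }
    }
    return corners;
}
```

Adding lights here costs CPU work per corner, but the number of draw calls stays the same, which is the point of the approach described above.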

5. Reduce Texture Binding

As many of you were discussing, this would improve FPS. All sprites are loaded into textures, and each time the client draws a sprite on the map it needs to bind the texture associated with that sprite; each binding degrades performance. In a perfect world, if the whole game map could be drawn without texture binding switches, or even better, in a single batch, there would be a great increase in FPS. But how to do that? It can be done with a technique called a texture atlas, where all currently visible and recent sprites are kept in a few huge textures, so much less texture switching is needed. This would require a major refactor of how sprites are handled in otclient.
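To make the atlas idea concrete, here is a minimal sketch of how a sprite could be located inside a set of atlas pages. It assumes a static layout where sprites are packed in index order, which is simpler than the "visible and recent sprites" cache described above; `AtlasSlot` and `locateSprite` are hypothetical names.

```cpp
#include <cassert>
#include <cstdint>

// Where a sprite lives: which atlas page, and the pixel offset inside it.
struct AtlasSlot { std::uint32_t page; std::uint32_t x; std::uint32_t y; };

// Hypothetical static layout: sprites are packed row by row, page after page.
// spriteIndex is zero-based.
inline AtlasSlot locateSprite(std::uint32_t spriteIndex,
                              std::uint32_t atlasSide,
                              std::uint32_t spriteSide)
{
    assert(spriteSide > 0 && atlasSide >= spriteSide);
    const std::uint32_t perRow = atlasSide / spriteSide;
    const std::uint32_t perPage = perRow * perRow;
    const std::uint32_t local = spriteIndex % perPage;
    return {spriteIndex / perPage,
            (local % perRow) * spriteSide,
            (local / perRow) * spriteSide};
}
```

Drawing a sprite then needs a texture bind only when the page changes, and the x/y offset turns into texture coordinates for the quad.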

NOTE: I have ordered those insights by difficulty of implementation, in my opinion.

Many of those optimizations take time and effort. Creating and coding otclient was fun and a great experience for me, but at some point I simply moved on to doing something else, and those improvements are left to the community. Even without those optimizations otclient works great (maybe not so well on old machines). In my tests with common scenarios and on a decent machine, I always had more FPS than Tibia's official client.

Nice to see you here, @edubart. Well, I have just a few questions and a little to discuss.

4. Lights don't have the best implementation.

It can be (and should be) done with shaders.

Something like this, for example:

vertex:

Code:

    attribute vec3 Vpos;
    varying vec2 vert;
    varying vec3 vertex_pos; // read by the fragment shader below

    void main()
    {
        vert = Vpos.xy;    // pass texture coord
        vertex_pos = Vpos; // pass position for the light distance test
        gl_Position = vec4(Vpos.xy, 0.0, 1.0);
    }

frag:

Code:

    varying vec2 vert;
    varying vec3 vertex_pos;
    uniform vec3 LPOS;     // light position
    uniform vec3 LDIFF;    // light diffuse color
    uniform float LRadius; // light radius

    // Euclidean distance between two points
    float n3ddistance(vec3 first_point, vec3 second_point)
    {
        float x = first_point.x - second_point.x;
        float y = first_point.y - second_point.y;
        float z = first_point.z - second_point.z;
        float val = x*x + y*y + z*z;
        return sqrt(val);
    }

    void main()
    {
        float dst = n3ddistance(LPOS, vertex_pos);
        // linear falloff: full intensity at the light, zero at LRadius
        float intensity = clamp(1.0 - dst / LRadius, 0.0, 1.0);
        vec4 color = vec4(LDIFF.x, LDIFF.y, LDIFF.z, 1.0) * intensity;
        gl_FragColor = color;
    }

Drawing a huge amount of tiles.

That should not be a problem in a 2D game. It just comes from your framework core.

First question: why does your framework lack this?

And another question: why does UIWidget have that implementation? UIManager behaves like a scene...

IMHO, there is a big TODO for refactoring. There should be a dedicated orthogonal Camera and also a Scene. In my view (maybe it's wrong, but I have never seen another implementation), the UI is a Drawable component that can hold controls. No less, no more. That structure should be more obvious and less complex, i.e. there should be a Scene to which you attach UI elements, entities, the camera, etc. for drawing. That makes it much easier to operate them all. For example, the Camera calculates our projection matrix (Rectangle), but NOT in the draw loop.
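A minimal sketch of a camera that keeps the projection out of the draw loop could look like this. `OrthoCamera` is a hypothetical class, not part of otclient; the matrix is a standard column-major orthographic projection with the origin at the top-left and y pointing down.

```cpp
#include <array>
#include <cassert>

// Hypothetical camera: the matrix is recalculated only when the viewport
// changes; the draw loop just reads the cached result.
class OrthoCamera {
public:
    void setViewport(float width, float height)
    {
        assert(width > 0.0f && height > 0.0f);
        if (width == m_width && height == m_height)
            return;
        m_width = width;
        m_height = height;
        m_dirty = true;
    }

    // Column-major 4x4 matrix mapping (0..width, 0..height) to clip space.
    const std::array<float, 16>& projection()
    {
        assert(m_width > 0.0f && m_height > 0.0f); // setViewport must be called first
        if (m_dirty) {
            m_matrix.fill(0.0f);
            m_matrix[0] = 2.0f / m_width;    // x scale
            m_matrix[5] = -2.0f / m_height;  // y scale, flipped so y grows downwards
            m_matrix[10] = -1.0f;            // z scale for near = -1, far = 1
            m_matrix[12] = -1.0f;            // x translation
            m_matrix[13] = 1.0f;             // y translation
            m_matrix[15] = 1.0f;
            m_dirty = false;
            ++m_recalcs;
        }
        return m_matrix;
    }

    int recalcCount() const { return m_recalcs; }

private:
    float m_width = 0.0f;
    float m_height = 0.0f;
    bool m_dirty = true;
    int m_recalcs = 0;
    std::array<float, 16> m_matrix{};
};
```

Calling `projection()` every frame is then cheap, because the matrix is rebuilt only after a resize.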

In the draw loop, you should just get the results of the camera's work. Also, Draw != Update. It is a bad idea to do them both in one loop. That dramatically reduces our performance...
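One common way to separate the two is a fixed-timestep loop, sketched below. This is a swapped-in, well-known technique rather than something the post spells out, and `runFrames` and `LoopStats` are hypothetical names; the frame times are passed in so the behaviour can be checked without a real clock.

```cpp
#include <cassert>
#include <vector>

struct LoopStats { int updates; int draws; };

// Logic updates run at a fixed step, independent of how often frames are drawn.
inline LoopStats runFrames(const std::vector<double>& frameTimes, double step)
{
    assert(step > 0.0);
    LoopStats stats{0, 0};
    double accumulator = 0.0;
    for (double frameTime : frameTimes) {
        accumulator += frameTime;
        while (accumulator >= step) {  // catch up on game logic
            ++stats.updates;           // update(step) would go here
            accumulator -= step;
        }
        ++stats.draws;                 // draw() would go here, once per frame
    }
    return stats;
}
```

Eight fast frames of 0.125 s with a 0.25 s step give 4 updates and 8 draws, while one slow 1.0 s frame gives 4 updates and 1 draw.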

Finally, OTClient has bad architectural solutions in the framework's Draw/Platform code (and partially in UIWidget), and the problems in the client part of the code are heavily influenced by those bad architectural solutions in the framework itself (but sprites & texture atlases are another story)... That's why I am going to write my own framework part of the code, and I will probably make a GitHub repo, with some pull requests into OTClient in the future.

252 or 165, it doesn't matter at all. In total there are maybe around 3000-5000 drawable objects at most. Hmm, well, for a 2.5D game it should not be a problem to draw 5000 objects on screen. (That is not the same as drawing 5k 3D models with ~1k polygons + specular, occlusion, normal, etc. textures with over 9000 post-processing effects, right?)

Just as a point of interest (really interesting for developers):

The Road to One Million Draws

Best regards.

Lights

Lights were not implemented as shaders (aka per-pixel lighting) because old graphics cards (there are a lot of players with old machines) don't support them. Of course, shaders would give the best implementation in terms of performance and appearance.

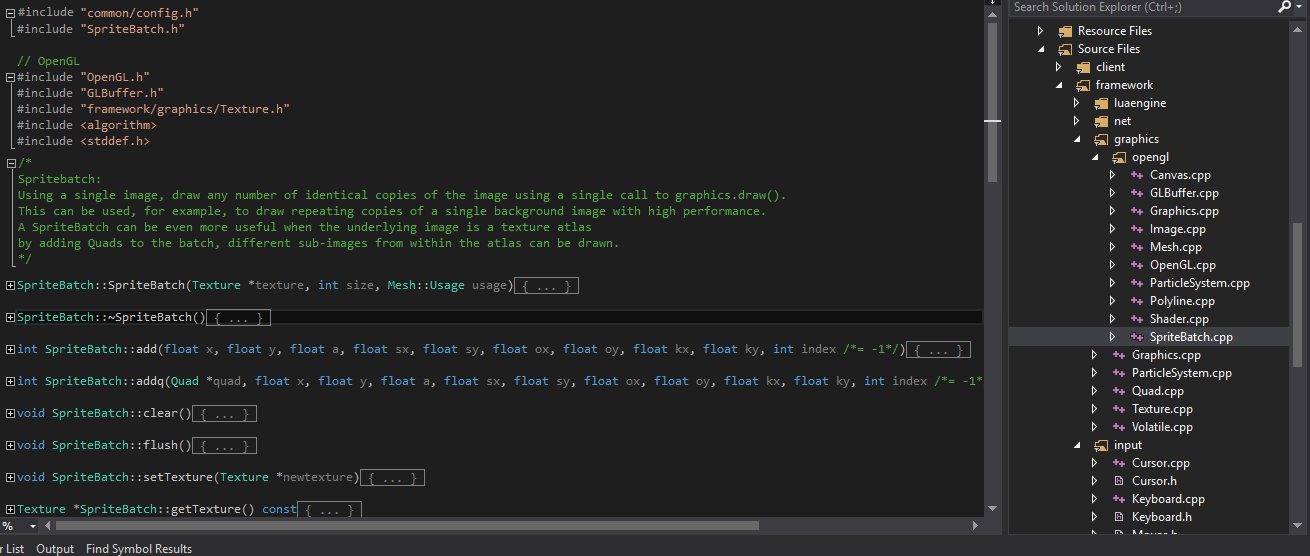

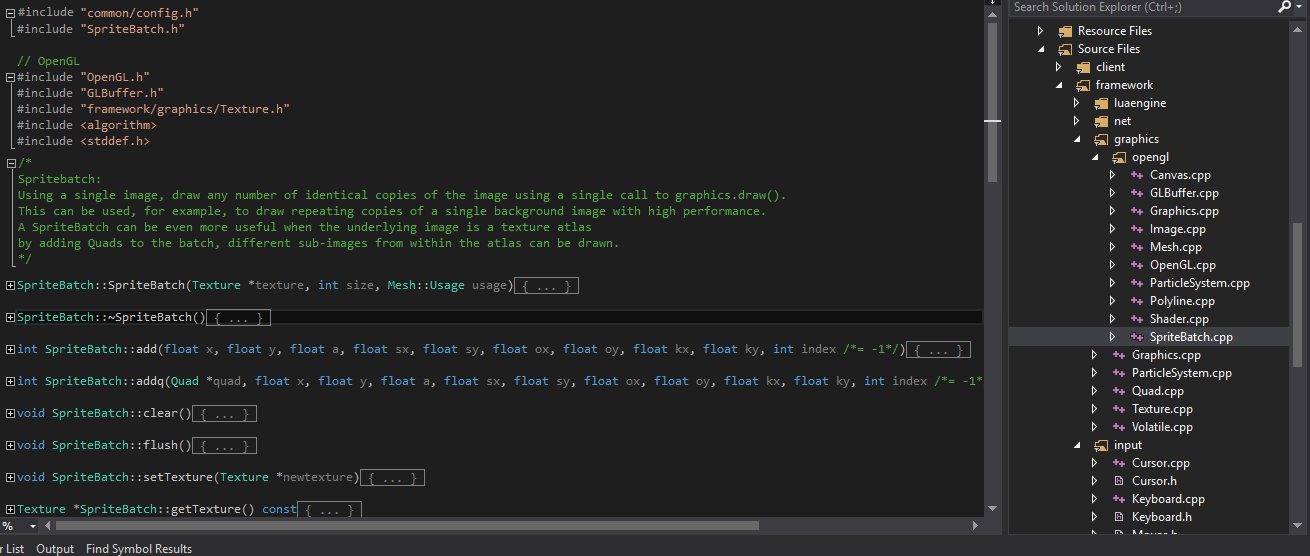

Sprite Batch

Batching is the holy grail of graphics optimization: the more you can batch, the better. However, it is not easy to batch in Tibia without a texture atlas. The tile rendering order is kind of complex, and if you batch repeated sprites in different tiles you end up with rendering glitches, because there is a specific order rule for drawing tiles and their objects. If you had a texture atlas of sprites, batching would be much simpler to do, because you would be able to render different objects in the same single batch, and this way you wouldn't break the drawing order rule.
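The effect of an atlas on order-preserving batching can be illustrated with a small sketch. It only merges consecutive draws that share a texture, so the drawing order never changes; `DrawCommand` and `countBatches` are hypothetical names and the quad data is left out.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct DrawCommand { unsigned textureId; /* quad data omitted */ };

// Counts how many batches (and texture binds) an ordered draw list needs
// when only neighbouring draws with the same texture are merged.
inline std::size_t countBatches(const std::vector<DrawCommand>& ordered)
{
    std::size_t batches = 0;
    for (std::size_t i = 0; i < ordered.size(); ++i) {
        if (i == 0 || ordered[i].textureId != ordered[i - 1].textureId)
            ++batches;
    }
    assert(batches <= ordered.size());
    return batches;
}
```

With one texture per sprite, a draw list like grass, tree, grass, tree needs 4 batches. If both sprites live in the same atlas page, every command carries the same texture id and the same list becomes 1 batch.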

You might think that in 3D you can batch without taking care of the drawing order. That's because in 3D you have a depth buffer (z-buffer) with depth testing, so you can batch objects that are behind other ones with ease. But what if we added a depth buffer to otclient? When you add a depth buffer you gain another problem: translucent objects (alpha blending) will bug, unless you draw in the correct order (what is behind the translucent object first). So you would have to draw in the correct order or not allow translucent objects. But every sprite with alpha values needs alpha blending because of the 100% transparent borders, unless you use alpha testing. However, alpha testing is deprecated in newer OpenGL (unless you use a fragment shader with the discard command, but that command slows down rendering a lot).

Nevertheless, some batching is done in otclient, but just for text drawing. You will see that it is hard to find other cases that we could batch without a texture atlas.

Drawing a huge amount of tiles

Drawing a lot of 2D objects is not a problem with recent graphics cards, but you always have to keep old machines with poor performance in mind. Just because recent cards can handle a huge amount of drawing is no excuse for drawing what you don't need. Much of graphics optimization lies in throwing away what you can't see; then, for what you can see, you avoid recalculating and use caches where possible.

Scene/Camera

An architecture with a scene and a camera is indeed a better design for avoiding recalculations done on the fly while drawing. This is a common approach for 3D games, and now there are some UI systems using a scene mechanism (like Qt Quick). Sadly, otclient was not made that way. Nevertheless, much of the drawing code in otclient does caching to avoid heavy recalculations, and the calculations that are not cached in the drawing loop are generally lightweight, and any CPU can handle them. What consumes most of the time in the draw loop is the OpenGL draw calls. Although there are a lot of operations with rects, points, floats and some logic before the draw calls, they run pretty fast compared with the OpenGL draw calls themselves.

If you feel that the code in the draw loops is consuming too many CPU cycles, there is a simpler way to optimize without refactoring everything: create another thread dedicated to drawing, and dispatch all OpenGL calls to that thread. This way you will have one thread completely dedicated to OpenGL rendering with no logic whatsoever, while the main thread handles all the other stuff and dispatches those calls. This is how I managed to get the best framerates in some engines that I worked with, and this way you also take advantage of CPUs with two or more cores. I always end up with an AFPS (application framerate, in the main thread) orders of magnitude greater than the FPS (rendering framerate, in the OpenGL thread).
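A dedicated drawing thread can be sketched with a simple command queue. This is a generic pattern, not code from otclient or from the engines mentioned above; `RenderThread` and `dispatch` are hypothetical names, and the queued commands stand in for OpenGL calls.

```cpp
#include <cassert>
#include <condition_variable>
#include <functional>
#include <mutex>
#include <queue>
#include <thread>
#include <utility>

// Hypothetical render thread: the main thread queues commands, the worker
// executes them in order. The destructor drains the queue and joins.
class RenderThread {
public:
    RenderThread() : m_worker([this] { run(); }) {}

    ~RenderThread()
    {
        {
            std::lock_guard<std::mutex> lock(m_mutex);
            m_stopping = true;
        }
        m_wake.notify_one();
        m_worker.join();
    }

    void dispatch(std::function<void()> command)
    {
        assert(command);
        {
            std::lock_guard<std::mutex> lock(m_mutex);
            m_commands.push(std::move(command));
        }
        m_wake.notify_one();
    }

private:
    void run()
    {
        for (;;) {
            std::function<void()> command;
            {
                std::unique_lock<std::mutex> lock(m_mutex);
                m_wake.wait(lock, [this] { return m_stopping || !m_commands.empty(); });
                if (m_commands.empty())
                    return;  // stopping and nothing left to draw
                command = std::move(m_commands.front());
                m_commands.pop();
            }
            command();       // run outside the lock so dispatch() never waits on drawing
        }
    }

    std::mutex m_mutex;
    std::condition_variable m_wake;
    std::queue<std::function<void()>> m_commands;
    bool m_stopping = false;
    std::thread m_worker;    // declared last so the other members exist before it starts
};
```

In a real client the OpenGL context would also have to be made current on the worker thread, since a context can be current on only one thread at a time.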

Also, if you find any code that does heavy calculation in the draw loop, there is always a way to come up with an idea for caching it between frames.

Bad Platform Code

Coding both Win32 and X11-Linux platform code sucks; I wish SDL2 had existed when I started otclient. I first started otclient with SDL1.2, but SDL1.2 had so many problems that players wouldn't accept (you couldn't walk using the numpad, every window resize would delete the whole OpenGL context, and many more limitations). Today SDL2 is in much better shape, and even Valve uses it in its games. With SDL2 the platform code would be in much better shape, and it would even be easier to port to other platforms (Android, iOS, Emscripten).

Finally, I look forward to seeing your contributions and changes to otclient in a GitHub repo. Your ideas of scene and batching are good and, done right, could improve the performance of the client and its framework.